connecting:stitches

Completed Fellowship

Summary

In “connecting:stitches”, costume designer Luise Ehrenwerth explores the possibilities of bringing together costume making and digital technologies. Her focus lies on electronic textiles and sensors made of conductive materials that are connected to microcontrollers, as well as on the implementation of textile AR markers in costumes. In addition to the question of what role costume design plays in the theater of digitality, the project is also a search for changing body images in a digital (art) world.

General Research Questions

- How is digitality influencing our understanding of the body, and how could digital technologies change the art of costume making?

- Is there a digital body and, if so, how does it differ from an analogue one?

- What is a digital costume – and is a digital costume still a costume?

- What are the new narrative possibilities for storytelling when extending a costume through digital technology?

Hands-on Research Questions

- Which textile manufacturing methods work best as image markers for Augmented Reality? How can they be integrated into a costume?

- How can a virtual costume be made with Blender? How does the process of 3D modeling a costume differ from the usual (analogue) workflow of costume creation?

- What is needed to make the costume an active digital play partner (a textile interface), with which one could change lights or sounds in the scenographic surroundings?

Fields of Research

Augmented Reality and Costumes

The first plan is to produce different types of textile image markers for Augmented Reality that can be integrated into costumes and reveal a digital costume overlay (or any other information) in AR. For this experimental set-up, various handcrafting techniques will be used, such as stitching, patchworking or textile printing.

eTextiles

The second interest is in eTextiles, especially textile sensors made of conductive materials. With these implemented in a costume, the wearers would have a textile digital interface with which they could change things in their surroundings (like lights or sound). The idea is to raise the costume's status from non-technological “clothing” to an active digital play partner.

Virtual Costume Making

The third topic concerns digital-only performances such as VR or other virtual theater formats. How can costume designers still be part of the creative process, especially if they do not have 3D modelling or programming skills? How does their artistic work differ from classical costume making when the goal is to design a costume for a digital theater piece?

The Interactive Augmented Reality Costume

Toward the end of my fellowship, I started to think of a way to combine the three fields of my research in one costume project. The following pictures show drafts of a possible communication between the costume (and its wearer) and a Unity AR app on a smart device (held by a participating audience member).

In the beginning there seems to be just a 'normal' costume... but when the tablet tracks the textile AR marker implemented in the fabric...

... LEDs light up in the costume. They are attached to a microcontroller that communicates with the Unity app on the smart device. The lit-up LEDs inform the wearer of the costume that the tracking of the AR marker worked and that the audience member can see the virtual content on the tablet.

In addition to the lights in the costume, there could also be switches, buttons or any other (eTextile) sensors that let the wearer of the costume change the AR content on the tablet (change the object, change the colour, change the size…).

The costume is now a digital interface that functions as a real-time controller of the experience for the holder of the smart device. But it is also possible to give the person with the smart device the opportunity to communicate with the intelligent costume and to interact with the AR objects on the costume (e.g.)

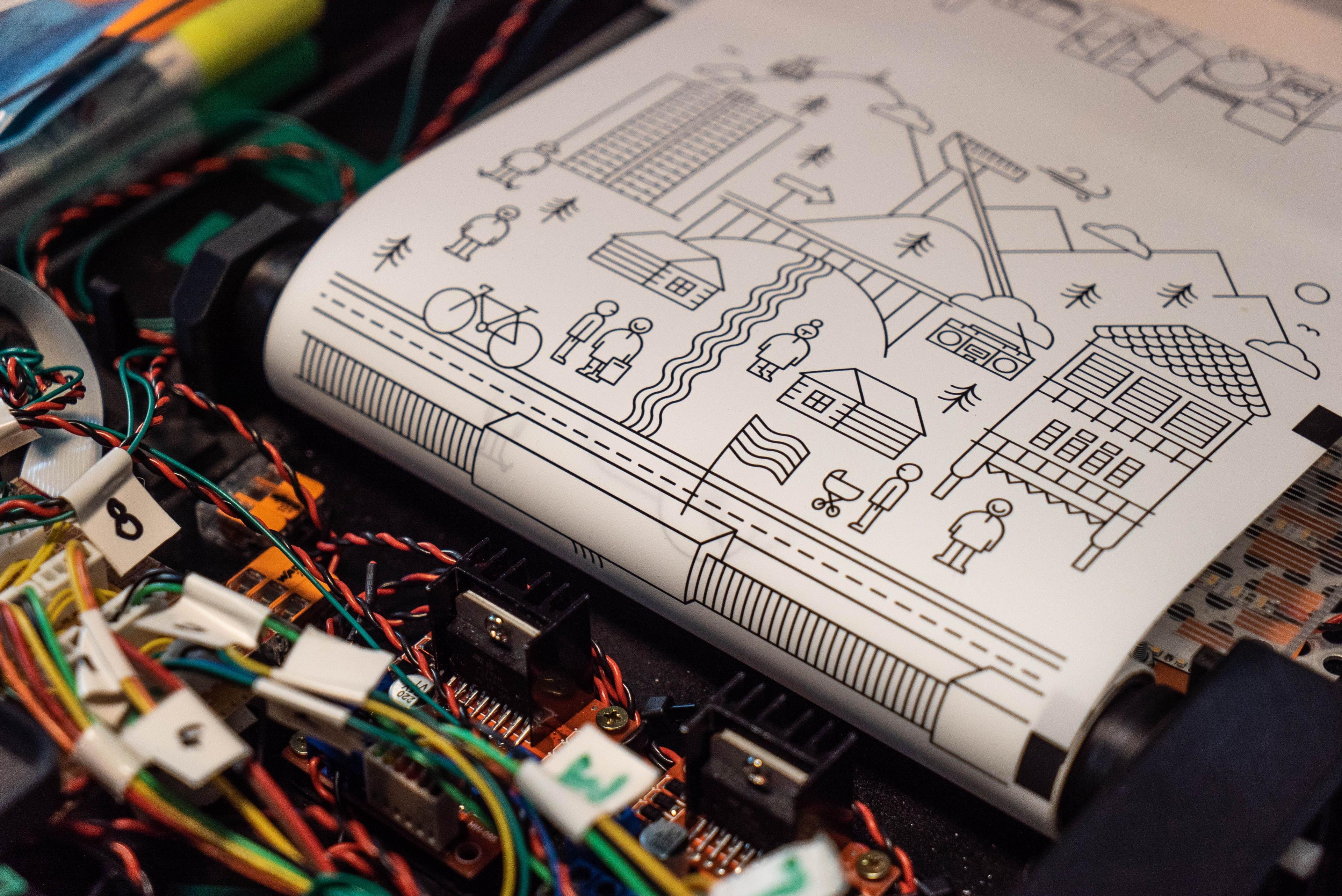

Workshop on MQTT and microcontrollers

In the fourth month of my fellowship, I took part in a three-day workshop given by Anton Kurt Krause, who is a theater director and software developer. I learned about network technology and how to set up an MQTT broker on a Raspberry Pi. Using the Mosquitto MQTT broker gave me the possibility to connect microcontrollers in the costume with an AR Unity app on a tablet.

This chart shows the technical setup of my project and gives a basic overview of how the MQTT machine-to-machine communication connects multiple technical clients (microcontrollers, Raspberry Pi, Unity app on a tablet) in one network:
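The publish/subscribe idea behind MQTT can be illustrated with a small sketch. It does not use Mosquitto or any real MQTT library; `ToyBroker` is a hypothetical in-memory stand-in that only supports exact topic matches, and the topic names are invented for illustration. It merely shows how the tablet and the costume could exchange messages through a broker without knowing about each other directly.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List, Tuple

Handler = Callable[[str, str], None]


class ToyBroker:
    """In-memory stand-in for an MQTT broker (exact-match topics only)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        # Register a callback that receives every message on this topic.
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: str) -> None:
        # Deliver the payload to all current subscribers of the topic.
        for handler in list(self._subscribers.get(topic, [])):
            handler(topic, payload)


# Hypothetical topic names, chosen for illustration only.
TOPIC_MARKER = "costume/marker/tracked"
TOPIC_BUTTON = "costume/buttons/1"

broker = ToyBroker()
sleeve_log: List[Tuple[str, str]] = []  # what the LED microcontroller receives
tablet_log: List[Tuple[str, str]] = []  # what the AR app receives

# The sleeve listens for tracking news, the tablet listens for button changes.
broker.subscribe(TOPIC_MARKER, lambda t, p: sleeve_log.append((t, p)))
broker.subscribe(TOPIC_BUTTON, lambda t, p: tablet_log.append((t, p)))

broker.publish(TOPIC_MARKER, "1")       # tablet reports: AR marker is tracked
broker.publish(TOPIC_BUTTON, "closed")  # costume reports: snap button 1 closed
```

In a real setup, the broker role would be played by Mosquitto on the Raspberry Pi, and each client would connect to it over the network.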

Making the interactive costume prototype

Based on this machine-to-machine communication technology, I combined what I learned during my fellowship about microcontrollers (eTextiles), Augmented Reality (Unity) and 3D modelling (Blender) in one costume project:

How it's made...

In one sleeve there are six Adafruit NeoPixel RGB LEDs, connected to an Adafruit Feather S2. This microcontroller works with an ESP32 chip, is Wi-Fi capable and can connect to the network and the MQTT broker. It is also small and easy to hide in the costume. Unfortunately, unlike the Adafruit Flora or the LilyPad Arduino, it does not have large pin holes for easy sewing. I therefore sewed it to the fabric for permanent use.

My original plan was to put a second microcontroller (Tiny S2, even smaller than the Feather S2) in the other sleeve and to attach six on/off switches to it, using common metallic snap buttons (metal = conductive). It took me some days to finish the soft circuit in the sleeve and to put everything together - and it worked very well in the beginning!

But later on, the circuit started to cause problems: small short circuits, probably caused by the individual traces sewn with conductive thread touching each other when the sleeve moved in the wrong direction. I decided to redo everything from the beginning and to put the microcontroller with the snap buttons in the pants of the costume.

For the pants I also used an Adafruit Feather S2 instead of the Tiny S2 that had been in the sleeve. The GPIO pin holes of the Tiny S2 are so close to each other that it is really hard to make sure the conductive threads do not touch. The Feather S2 is only slightly bigger, and the distance between its GPIOs is bigger, too. When redoing the circuit, I also chose GPIOs with at least one spare pin in between.
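The rule of leaving one spare GPIO between two used ones can be written down as a tiny helper. This is only a sketch: `pick_spaced_pins` is a hypothetical function, and the pin numbers below are placeholders in an assumed physical order along the header, not the real Feather S2 layout.

```python
from typing import List, Sequence


def pick_spaced_pins(header_order: Sequence[int], count: int) -> List[int]:
    """Pick `count` pins, skipping one pin between every two chosen ones.

    `header_order` must list the pins in their physical order on the board.
    """
    chosen = list(header_order[::2])  # every second pin along the header
    if len(chosen) < count:
        raise ValueError("not enough pins to leave a spare one in between")
    return chosen[:count]


# Placeholder pin numbers in physical order (assumption, not the real board).
example_header = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12]
button_pins = pick_spaced_pins(example_header, 6)
```

Here six snap buttons need a run of at least eleven neighbouring pin holes, which is one reason a slightly larger board is more comfortable to sew.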

In the pants the circuit worked much better and more reliably - I came to the conclusion that the sleeves may not be the best part of a garment in which to implement electronics, because we simply move our arms too much.

This is the circuit piece before sewing it into the pants. This time I took better care to insulate the traces of conductive thread from each other, sewing “cable tunnels” with normal, non-conductive thread. I also sewed everything with the sewing machine (using the conductive thread as the lower thread!), which makes the traces straighter.

With the costume as it is, the wearer can decide which objects are visible in the AR app on the tablet by closing or opening the metallic snap buttons in the pants. Each snap button is linked to one virtual AR object in the app. If all six buttons are closed, all six AR objects are visible in the virtual space. Opening a button “hides” the virtual object. The NeoPixel LEDs in the right sleeve are mapped to the six snap buttons: snap button one - LED one, snap button two - LED two, and so on. When a button is closed, its LED turns blue.
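The mapping between snap buttons, AR objects and LEDs can be sketched as two small functions. The function names are hypothetical, and the colour of an LED whose button is open is not stated above; the sketch assumes that it is simply off.

```python
from typing import List, Sequence, Tuple

Color = Tuple[int, int, int]  # (red, green, blue), each 0-255

BLUE: Color = (0, 0, 255)
OFF: Color = (0, 0, 0)  # assumption: LED stays dark while its button is open


def visible_objects(snaps_closed: Sequence[bool]) -> List[int]:
    """Return the numbers (1-6) of the AR objects that should be shown."""
    return [i + 1 for i, closed in enumerate(snaps_closed) if closed]


def led_colors(snaps_closed: Sequence[bool]) -> List[Color]:
    """Return one colour per LED: blue if the matching snap button is closed."""
    return [BLUE if closed else OFF for closed in snaps_closed]


# Example: snap buttons one, three and four are closed.
snaps = [True, False, True, True, False, False]
shown = visible_objects(snaps)
colors = led_colors(snaps)
```

With this example state, the app would show objects one, three and four, and the first, third and fourth LED in the sleeve would be blue.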

The person with the tablet has six buttons of their own on the user interface of the app. Clicking one of those buttons changes the colour of the corresponding LED (one to six) to red - which tells the wearer of the costume that the tablet holder wants to change the current state of the snap button (either opening or closing it).
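This two-way exchange can be modelled as a small state object. The class and method names are hypothetical, and two details are assumptions that the text does not specify: an open button without a request leaves its LED off, and a red request is cleared as soon as the wearer opens or closes the matching snap button.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Color = Tuple[int, int, int]  # (red, green, blue), each 0-255

RED: Color = (255, 0, 0)
BLUE: Color = (0, 0, 255)
OFF: Color = (0, 0, 0)  # assumption: no request and button open means LED off


@dataclass
class CostumeState:
    closed: List[bool] = field(default_factory=lambda: [False] * 6)
    requested: List[bool] = field(default_factory=lambda: [False] * 6)

    def tablet_click(self, index: int) -> None:
        # The tablet holder asks the wearer to change snap button `index`.
        self.requested[index] = True

    def snap_changed(self, index: int, closed: bool) -> None:
        # The wearer opened or closed a snap button.
        self.closed[index] = closed
        self.requested[index] = False  # assumption: request is now answered

    def led_color(self, index: int) -> Color:
        # A pending request takes priority over the normal blue/off display.
        if self.requested[index]:
            return RED
        return BLUE if self.closed[index] else OFF


state = CostumeState()
state.snap_changed(0, True)    # wearer closes snap button one: LED one is blue
state.tablet_click(0)          # tablet holder clicks button one: LED one is red
color_while_requested = state.led_color(0)
state.snap_changed(0, False)   # wearer opens the button: request is cleared
```

In the actual costume, `tablet_click` and `snap_changed` would be triggered by messages arriving through the MQTT broker rather than by direct calls.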