The (Un)Answered Question

Abstract

This research project seeks to explore the application of Data Science to make the uniqueness of each live performance tangible in a special way. It involves combining certain methodologies and techniques from this field with conceptual and compositional ideas from theater and music.

Based on the poem 'The Sphinx' by Ralph Waldo Emerson and the piece 'The Unanswered Question' by composer Charles Ives, a prototype performance has been explored and developed. Bio data of the participants (audience and performers) are collected and clustered algorithmically. The clustered data is then processed into video projections and into a live orchestra remix of the original composition, which features a new score (streamed as single parts to tablets for the musicians) and live audio processing.

This results in an immersive and exclusive live experience.

About

HIDA/ATD and the project partners within the Helmholtz Association

This research project was carried out within a joint fellowship program between the Helmholtz Information and Data Science Academy (HIDA) and the Academy for Theater and Digitality (ATD). The program aims to make the field of Data Science accessible to the public through art, and to ensure that cutting-edge research into artificial intelligence serves as a source of ideas and inspiration for the next generation of theater and cultural professionals.

Two research groups from different Helmholtz Centers contributed to this project:

Berlin Ultrahigh Field Facility, Max Delbrück Center for Molecular Medicine (MDC), group of Prof. Thoralf Niendorf.

The Institute for Software Technology, Department Intelligent and Distributed Systems, German Aerospace Center (DLR), department heads: Andreas Schreiber, Carina Haupt

Martin Hennecke is a German percussionist and composer, and since 2009 has been Associate Principal Timpanist of the Saarland State Orchestra.

With his ensemble 'Percussion Under Construction', he creates opportunities to perform the best of the percussion repertoire through a series of full-length live shows that mix solo and ensemble percussion literature, ranging from classic masterpieces to contemporary works, including his own compositions. His music (mostly percussion, orchestral and electronic pieces) has been performed at venues such as the Philharmonie Luxembourg and the UNESCO World Heritage Site Völklinger Hütte, and at festivals such as Euroclassic, SubsTanz and Zappanale.

He is a sought-after timpanist and percussionist and has been invited to work with about one fifth of the 129 professional orchestras in Germany, e.g. the Bamberg Symphony, Bremen Philharmonic, Frankfurt Opera, Gürzenich Orchestra Cologne, Hannover State Orchestra, Baden State Orchestra Karlsruhe, Nuremberg State Orchestra and the radio orchestras of WDR, SWR and SR, as well as orchestras outside Germany such as the Orchestre Philharmonique du Luxembourg and the Qatar Philharmonic Orchestra.

At the 2013 German Music Competition, he was awarded the coveted scholarship and invited to take part in the concert series 'National Selection of Young Artists'. Martin Hennecke is also a member of the committee for theater and concert orchestras at the German Musicians' Union and teaches at the University of Music Saar.

Timetable

Phase I - Establishment of concept and research questions at the Academy for Theater and Digitality (ATD) Dortmund (five weeks, 06.09.21-08.10.21); audio and video recording of strings, winds and trumpet (featuring musicians of the Dortmund Philharmonic Orchestra, 01.10.21); MRI (featuring an actor of the Saarland State Theater) at the Berlin Ultrahigh Field Facility, Max Delbrück Center for Molecular Medicine, research group of Prof. Thoralf Niendorf (04./05.10.21).

Phase II and Phase III - Further research (five weeks) and development of technical solutions (seven weeks) at the German Aerospace Center (DLR), Institute for Software Technology, Department Intelligent and Distributed Systems, research group of Andreas Schreiber (17.01.22-10.04.22).

Phase IV - Further development and prototype performance at ATD Dortmund (three weeks, 01.05.22-23.05.22, prototype performance 17.05.22).

Initial Questions (examples)

- Is it possible to create pieces for theater/opera/orchestra that will be different every night, depending on who is performing and watching?

- Different people attend a performance every night (performers and audience). Can we use this resource of “varying data”?

- Can this data be processed by approaches we know from the field of Data Science?

- Could this change a performance, e.g. by varying musical parameters (“remix”) or visuals?

- What kind of (biomedical and biographical) data can we collect? How can it be processed artistically? How does the data get into the DAW/video program? How is it made performable for the musicians?

- To what extent can one build on projects already carried out by the Helmholtz partners?

- Can biomedical imaging and AI be used to find connections between vital signs and poetry/music and can that be used artistically?

- Is it possible to find connections between “inspiration” and “subordinate work” through Deep Data, and again use that artistically?

Research Goal

This research project explored and developed software and technological solutions to make the uniqueness of each live performance tangible in a special way, using Data Science together with conceptual and compositional ideas from theater and music.

This was approached through the development of a three-part prototype performance in which bio data of audience and performers are used as the primary data. The data is clustered, and the result influences a live orchestra remix of an existing composition, featuring a new score, spoken word, live audio processing and visuals:

Part I

An actor recites the poem 'The Sphinx' by Ralph Waldo Emerson (1803-1882). While he recites, an MRI video of the actor's heart, recorded beforehand (also while reciting) at the Berlin Ultrahigh Field Facility, is shown in sync.

In the context of the Oedipus myth, the Sphinx is said to have guarded the entrance to the Greek city of Thebes, posing a riddle to travellers before allowing them passage. If they could not answer, she would devour them (Edmunds, Lowell. The Sphinx in the Oedipus Legend, Hain: 1981).

“Thou art the unanswered question;

Couldst see thy proper eye,

Alway it asketh, asketh;

And each answer is a lie.

So take thy quest through nature,

It through thousand natures ply;

Ask on, thou clothed eternity;

Time is the false reply.”

Excerpt from 'The Sphinx', Ralph Waldo Emerson

Part II

An orchestra performs 'The Unanswered Question' (revised version 1930/1935) by Charles Ives, which features compositional techniques characteristic of the twentieth century (such as “reordered chronologies and the layering of seemingly independent material”) and was inspired by 'The Sphinx', in particular in terms of “the language, imagery, structure, and worldview” (McDonald, Matthew. Silent Narration? Elements of Narrative in Ives's The Unanswered Question. 19th-Century Music Vol. 27, Issue 3: 2004). Meanwhile, bio data of the audience is collected: heart rate, answers to a questionnaire about empathy, and emotional state via facial recognition.

“The strings play ppp throughout with no change in tempo.

They are to represent ‘The Silence of the Druids -

Who Know, See and Hear Nothing’.

The trumpet intones ‘The Perennial Question

of Existence’, and states it in the same tone of

voice each time. But the hunt for ‘The Invisible

Answer’ undertaken by the flutes [the woodwinds]

and other human beings, becomes gradually

more active, faster and louder (…)”

Foreword to the 1953 print edition by Charles Ives

Part III

The data sets from Parts I and II are clustered together using a clustering algorithm, and the resulting cluster data, together with the MRI videos, is used to create a live orchestra remix. The collected data is also processed into a visualisation.

Development

Special credit to the Helmholtz and ATD experts.

Collecting the Data

Several kinds of bio data were explored for their creative potential and their practicability:

* Heart frequency using electrocardiography

* Heart frequency using optical heart sensors

* Electrodermal activity (skin conductance response)

* “Brain waves” using electroencephalography

* Biomedical imaging using MRI

* Active audience input (“pushing buttons”)

* Several psychological evaluations

After weighing the pros and cons (mostly for practical reasons, but also, e.g., “is there enough variety in the data for the performance to differ when different people attend?”), four kinds of data were chosen to be pursued further in this project:

1. Biomedical imaging by MRI. An experimental high-resolution (1.1 mm × 1.1 mm × 2.5 mm) cardiac MRI at 7.0 tesla was used to create a four-chamber view of the actor's heart while he recited the poem, verse by verse. Some testing was needed to find the right resolution and a suitable viewing angle. This particular MRI is capable of a 0.8 mm × 0.8 mm resolution, but at that resolution the images contained too much background noise for a big-screen projection (Prof. Niendorf, Thomas Eigentler, Lisa Krenz, MDC).

2. Heart frequency via fitness trackers. 200 Xiaomi Mi Bands transmit the heart frequency of the audience members. A Python script was written to collect and process the data (Sophie Kernchen, DLR).

3. Emotional state by facial recognition. Two cameras (left and right) capture the faces of the audience. The open-source software deepface is used to estimate the probability of the following emotions: happy, sad, angry, disgust, fear, surprise and neutral. Issues to solve when using this technology on a theater audience included making sure the audience is lit brightly enough for the cameras to recognise 200 faces, data privacy protection, and the COVID-19 mask mandate (Sophie Kernchen, DLR).

4. Audience questionnaire about empathy. A questionnaire via https://www.limesurvey.com, made accessible through a QR code, gives audience members the opportunity to provide data about their level of empathy, figuratively how “big” their heart is. Here, too, privacy concerns were the biggest issue: it appears that almost all survey websites hosted outside the European Union cannot comply with the standards of the European General Data Protection Regulation (David Heidrich, DLR).
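The audience-side collection in items 2 and 3 can be pictured with a small sketch. It is not the project's script: it assumes that each Mi Band yields a list of heart-frequency readings in beats per minute and that each detected face yields a dictionary of emotion probabilities in percent, roughly like the `emotion` field returned by deepface's `DeepFace.analyze(..., actions=['emotion'])`. The plausibility window of 30 to 220 bpm and all function names are hypothetical.

```python
from statistics import mean

# The seven emotion classes listed above (deepface's emotion model uses these labels).
EMOTIONS = ("angry", "disgust", "fear", "happy", "sad", "surprise", "neutral")

# Hypothetical plausibility window for wrist-sensor readings, in beats per minute.
MIN_BPM, MAX_BPM = 30, 220


def mean_heart_rate(readings):
    """Average the plausible readings of one band; None if no valid reading remains."""
    valid = [bpm for bpm in readings if MIN_BPM <= bpm <= MAX_BPM]
    return mean(valid) if valid else None


def audience_emotions(faces):
    """Average per-face emotion probabilities (in percent) over all detected faces."""
    if not faces:
        return {e: 0.0 for e in EMOTIONS}
    # Emotions missing from a face's result are treated as 0 percent.
    return {e: mean(face.get(e, 0.0) for face in faces) for e in EMOTIONS}


def dominant(profile):
    """Return the emotion with the highest averaged probability."""
    return max(profile, key=profile.get)


if __name__ == "__main__":
    # Hypothetical raw data: a 0 reading (no contact) and an outlier get filtered out.
    bands = {"band-001": [72, 0, 75, 78], "band-002": [90, 88, 250, 92]}
    faces = [
        {"happy": 60.0, "neutral": 30.0, "surprise": 10.0},
        {"happy": 20.0, "neutral": 70.0, "sad": 10.0},
    ]
    print({band: mean_heart_rate(r) for band, r in bands.items()})
    profile = audience_emotions(faces)
    print(dominant(profile), profile["neutral"])
```

Keeping per-band averages and an averaged emotion profile separate leaves the choice of which values to pair for clustering to the next step.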

Clustering

The initial idea of training a neural network during the development phase turned out not to be feasible, because there was not enough test data available before the performances. This might be something to investigate in the future.

So a different approach was taken: the four collected data sets are merged and clustered in pairs, namely the heart frequency of the actor (MRI) with the heart frequency of the audience (Mi Bands), and the emotional state with the empathy questionnaire. Clustering uses the k-means algorithm, a method of vector quantisation originally from signal processing that aims to partition n observations into k clusters in which each observation belongs to the cluster with the nearest mean (Lynn von Kurnatowski, DLR).
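To illustrate the k-means step, here is a minimal pure-Python implementation of Lloyd's algorithm. It is a sketch, not the DLR clustering code: the two-dimensional points (standing in, hypothetically, for pairs such as emotion score and empathy score per audience member), the choice k = 2 and the deterministic initialisation from the first k points are assumptions made for readability. In practice a library implementation such as scikit-learn's `KMeans` with several random initialisations would usually be preferred.

```python
from math import dist


def kmeans(points, k, max_iter=100):
    """Partition points into k clusters; each point joins the cluster with the nearest mean."""
    # Deterministic initialisation (assumption): the first k points become the centroids.
    centroids = [tuple(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(max_iter):
        # Assignment step: label each point with the index of its closest centroid.
        labels = [min(range(k), key=lambda c: dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged: the assignments can no longer change
        centroids = new_centroids
    return labels, centroids


if __name__ == "__main__":
    # Hypothetical feature pairs for six audience members.
    points = [(1.0, 1.0), (8.0, 9.0), (1.5, 2.0), (9.0, 8.0), (1.0, 1.5), (8.5, 8.5)]
    labels, centroids = kmeans(points, k=2)
    print(labels)  # [0, 1, 0, 1, 0, 1]
```

Because k-means only guarantees a local optimum, the result depends on the initial centroids; with live audience data, multiple restarts would make the clustering more stable from night to night.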

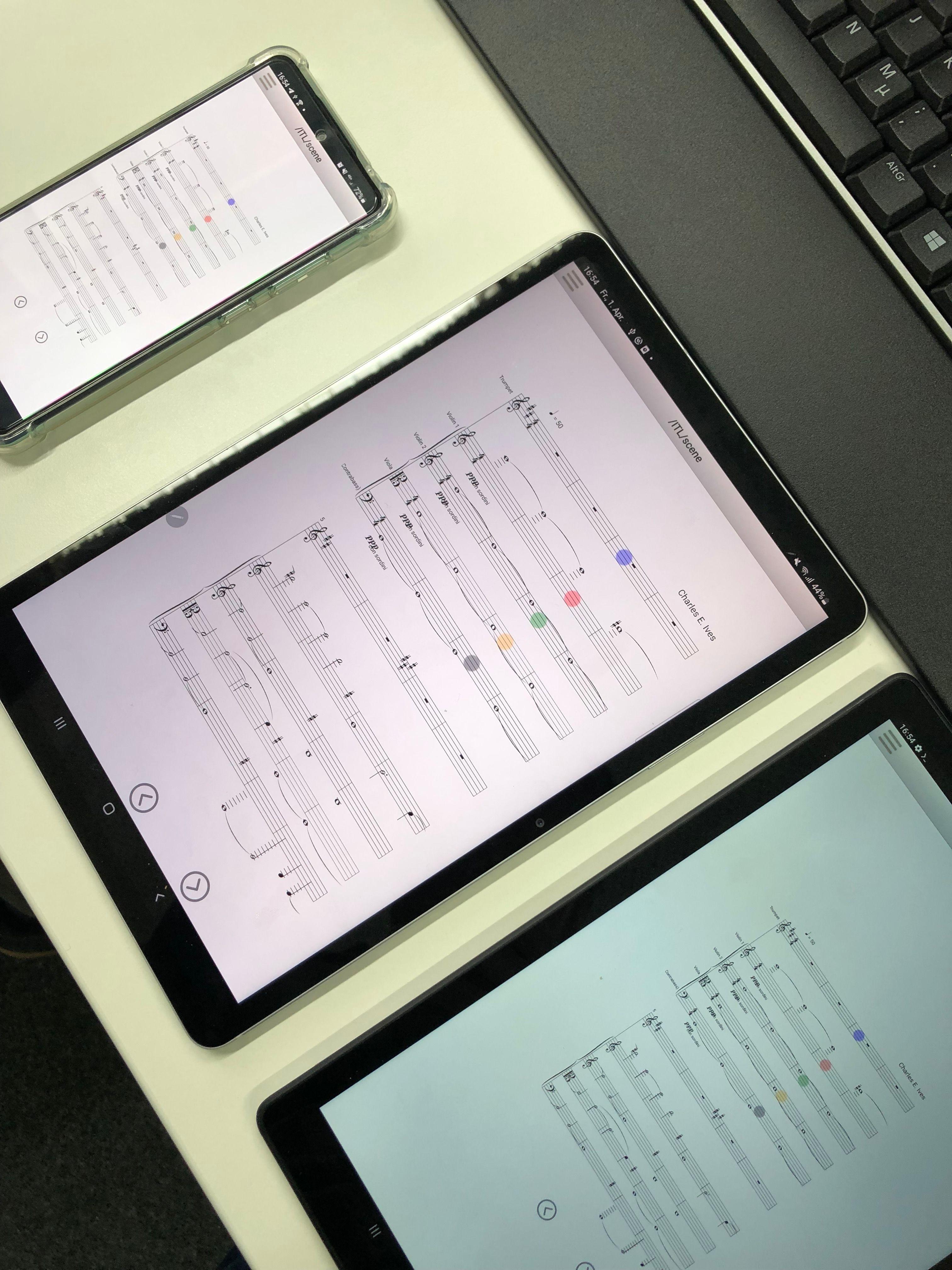

Score Remix and Score Distribution

For this project, a “ScoreMaker” was developed using Python 3 and the Open Sound Control (OSC) network protocol. Data points from the clusters control several different variables of the remix (for example, “Which rhythm are we applying to this bar in which staff?”). The full score is rendered and then distributed as single-part scores to several tablets. The tempo is indicated by a synchronised cursor above the notes. The system uses the Guido Music Notation format by Holger H. Hoos and Keith Hamel and the software INScore (version 1.31), which was initiated by Dominique Fober and the Interlude project. The Center of Music and Film Informatics at Detmold University of Music provided valuable information on several digital score-viewing applications. Of these options, the combination of “ScoreMaker”, INScore and Guido seemed the most promising, especially in terms of how quickly a score could be remixed on the fly (Benjamin Wolff, DLR).
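The core idea of the “ScoreMaker”, letting data decide which rhythm is applied to which bar, can be sketched as a lookup from cluster labels to rhythm patterns that is rendered as Guido Music Notation (GMN) text, the format INScore displays. The rhythm table, the pitch and the function names below are hypothetical, and the output uses only basic GMN note syntax (pitch, octave, duration) plus the `\meter` and `\bar` tags; it is not the project's code.

```python
from fractions import Fraction

# Hypothetical rhythm table: cluster label -> note durations (as 1/n) for one 4/4 bar.
RHYTHMS = {
    0: [4, 4, 4, 4],     # four quarter notes
    1: [8, 8, 4, 2],     # two eighths, a quarter, a half
    2: [2, 8, 8, 8, 8],  # a half, then four eighths
}


def bar_to_gmn(pitch, label):
    """Render one bar on a single pitch using the rhythm chosen by the cluster label."""
    denominators = RHYTHMS[label]
    # The pattern must fill exactly one 4/4 bar.
    if sum(Fraction(1, d) for d in denominators) != 1:
        raise ValueError(f"rhythm {label} does not fill a 4/4 bar")
    return " ".join(f"{pitch}/{d}" for d in denominators)


def part_to_gmn(pitch, labels):
    """Render a single-part GMN voice: one bar per cluster label, with bar lines between."""
    bars = [bar_to_gmn(pitch, label) for label in labels]
    return r'[ \meter<"4/4"> ' + r" \bar ".join(bars) + " ]"


if __name__ == "__main__":
    # Two bars whose rhythms were selected by the cluster labels 1 and 0.
    print(part_to_gmn("c1", [1, 0]))
    # [ \meter<"4/4"> c1/8 c1/8 c1/4 c1/2 \bar c1/4 c1/4 c1/4 c1/4 ]
```

Generating plain GMN strings keeps the remix step independent of rendering: the same text could be sent to INScore, which accepts GMN over OSC, for display on each tablet.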

In addition to the new score for the musicians, certain parts of the poem are triggered by cluster data. The data points are sent via OSC as a time series into Ableton, using a Python-based “OSC-Player”. The cluster determines which verses of the poem are included in the remix on any given night. Important tools proved to be pythonosc and guidolib (Philipp Kramer, ATD).

In addition, the sound of certain sections of the orchestra is manipulated electronically. The woodwinds and trumpet are picked up by microphones, and their signal is altered using a video mapping of the MRI heart to control parameters and faders in Ableton. This was achieved with existing Max for Live devices.
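The “OSC-Player” idea, sending time-stamped data points to Ableton over OSC, can be illustrated without third-party packages. The sketch below encodes OSC 1.0 messages by hand (null-terminated strings padded to four bytes, big-endian 32-bit integers and floats) and plays a list of (seconds, address, value) events through a UDP socket. The project itself used pythonosc for the encoding; the addresses such as `/poem/verse`, the port 9000 and the event list are hypothetical, and an OSC receiver on the Ableton side (for example a Max for Live device) is assumed to listen on that port.

```python
import socket
import struct
import time


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of four bytes, as OSC 1.0 requires."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, value) -> bytes:
    """Encode a single-argument OSC message (int32 or float32)."""
    if isinstance(value, int):
        tag, payload = ",i", struct.pack(">i", value)
    else:
        tag, payload = ",f", struct.pack(">f", float(value))
    return _pad(address.encode("ascii")) + _pad(tag.encode("ascii")) + payload


def play(events, send, clock=time.monotonic, sleep=time.sleep):
    """Send (seconds, address, value) events at their offsets from the start time."""
    start = clock()
    for offset, address, value in sorted(events):
        delay = start + offset - clock()
        if delay > 0:
            sleep(delay)
        send(osc_message(address, value))


if __name__ == "__main__":
    target = ("127.0.0.1", 9000)  # hypothetical port of the receiving device
    events = [(0.0, "/poem/verse", 3), (0.5, "/mix/fader", 0.75)]
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        play(events, lambda packet: sock.sendto(packet, target))
```

Passing `send` in as a function keeps the timing logic testable without a network and makes it easy to swap in pythonosc's client instead.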
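How a video signal can drive a fader is easy to sketch, although the project used existing Max for Live devices rather than custom code. The hypothetical example below takes a per-frame brightness value of the MRI heart video (for instance, the mean pixel intensity of a region around the heart, on a 0 to 255 scale), rescales it to a 0.0 to 1.0 fader range, clamps it, and smooths it with an exponential moving average so that the audio parameter does not jump from frame to frame. The value range and the smoothing factor are assumptions.

```python
def to_fader(value, lo=0.0, hi=255.0):
    """Linearly rescale value from [lo, hi] to [0, 1], clamping values outside the range."""
    x = (value - lo) / (hi - lo)
    return min(1.0, max(0.0, x))


def smooth(values, alpha=0.2):
    """Exponential moving average: each output moves a fraction alpha toward the input."""
    out = []
    state = None
    for v in values:
        state = v if state is None else state + alpha * (v - state)
        out.append(state)
    return out


if __name__ == "__main__":
    brightness = [40, 200, 210, 60, 255, 300]  # hypothetical per-frame values
    faders = smooth([to_fader(b) for b in brightness])
    print([round(f, 3) for f in faders])
```

A smaller `alpha` gives a calmer, slower-moving parameter; a larger one follows the heartbeat in the video more closely.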

Visualisation

In the third part of the performance, the previously collected data is visualised and projected on stage using the game engine Unity. The visuals use the MRI video of the actor (itself manipulated by audience data), as well as the development of the audience's heart frequency and emotional state over time (Adriana Rieger, DLR).

The Prototype Performance

The prototype performance took place on May 17th 2022 at ATD as a scaled-down version with a test audience. The clustering, the “ScoreMaker” (including streaming to tablets on music stands), the “OSC-Player”, the sound manipulation and the visuals were tested with real audience data from Mi Bands, facial recognition and the questionnaire. The score remix was made audible by a MIDI mock-up. Takeaways were some minor artistic improvements and a better understanding of how the clustered data should be made available in order to bring everything together. The amount of audience data input led to some problems with the merging script. The development of a “sync script” (which sends the newly generated scores to the tablets and starts the cursor playback, the “OSC-Playback” and the visuals) had been postponed for lack of time; the performance showed that it is needed. The improvements are scheduled to be fully implemented in summer 2022.

The first full public performance with orchestra will be on November 8th with Saarländisches Staatsorchester at Alte Feuerwache Saarbrücken.

Acknowledgement

Acknowledgement goes to the Helmholtz Information & Data Science Academy (HIDA) for providing financial support that enabled a short-term research stay at the Max Delbrück Center (MDC), to learn about biomedical imaging and to record the MRI, and a three-month research stay at the German Aerospace Center (DLR) to develop technical solutions for this project and create the required software.

A special thank you to Prof. Thoralf Niendorf, Thomas Eigentler and Lisa Krenz of MDC; Andreas Schreiber, Carina Haupt, Benjamin Wolff, Lynn von Kurnatowski, David Heidrich, Adriana Rieger and Sophie Kernchen of DLR; Marcus Lobbes, Michael Eickhoff and Philipp Kramer of ATD; Aristotelis Hadjakos and Matthias Nowakowski of the Center of Music and Film Informatics at Detmold University of Music; and Simone Kranz of Saarländisches Staatstheater.

The research stay as part of the fellowship program at Academy for Theater and Digitality (ATD) in Dortmund is funded by the Wilo-Foundation.

Project developed at the Akademie für Theater und Digitalität.